Semi-Dual Embedding based Latent Factor Model

Based on the following lectures

(1) "Recommendation System Design (2024-1)" by Prof. Ha Myung Park, Dept. of Artificial Intelligence, College of SW, Kookmin Univ.

(2) "Recommender System (2024-2)" by Prof. Hyun Sil Moon, Dept. of Data Science, The Grad. School, Kookmin Univ.

DRNet

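- Motivation

    - Relation types modeled by existing collaborative filtering

        - Latent Factor Model: models user-item relations; strong personalized recommendation performance (e.g., NCF)

        - Item-based collaborative filtering (User-Free Model): models item-item relations; robust to data sparsity (e.g., SLIM, FISM)

    - Attention-based aggregation of history items (e.g., NAIS)

        - The more strongly a user preferred an item in the past, the greater that item's influence on the user's choice of a new item.

        - History items should therefore be aggregated with attention weights that differ according to the user's degree of preference for each item.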

DRNet (Dual Relation Network): a model that learns a user-item matching function and an item-item matching function in parallel.

- Ji, D., Xiang, Z., & Li, Y. (2020). Dual relations network for collaborative filtering. IEEE Access, 8, 109747-109757.

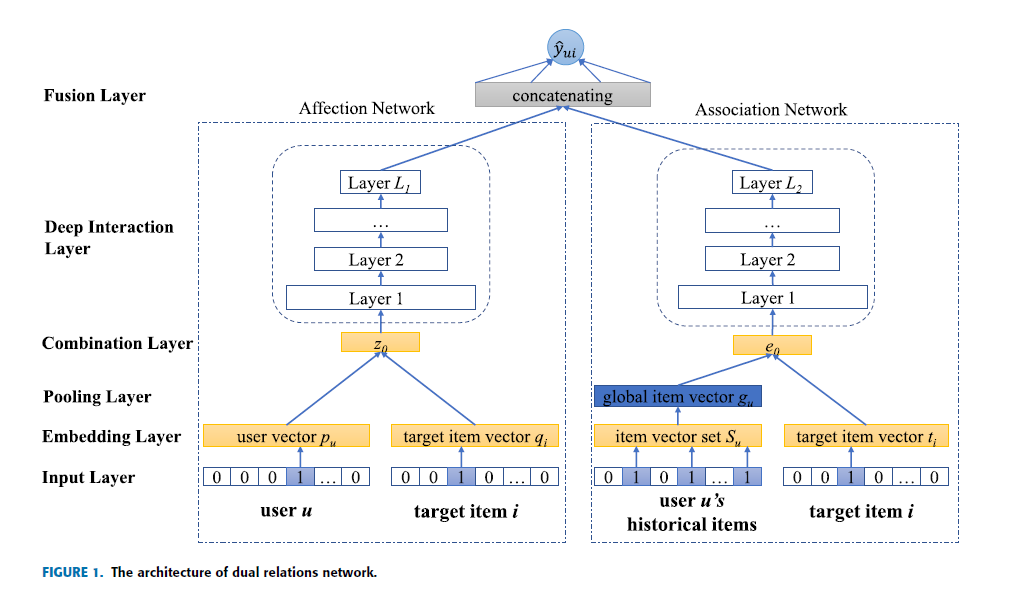

- Components

    - Affection Network: models the user-item relation

    - Association Network: models the item-item relation

    - Dual-Relation Network: combines the Affection Network and the Association Network

Notation

- $u=1,2,\cdots,M$: user index

- $i=1,2,\cdots,N$: item index

- $\mathbf{Y} \in \mathbb{R}^{M \times N}$: user-item interaction matrix

- $\overrightarrow{\mathbf{u}}_{u} \in \mathbb{R}^{K}$: user id embedding vector @ affection network

- $\overrightarrow{\mathbf{v}}_{i} \in \mathbb{R}^{K}$: item id embedding vector @ affection network

- $\overrightarrow{\mathbf{p}}_{i} \in \mathbb{R}^{K}$: target item id embedding vector @ association network

- $\overrightarrow{\mathbf{q}}_{j} \in \mathbb{R}^{K}$: history item id embedding vector @ association network

- $\overrightarrow{\mathbf{z}}_{u,i}$: predictive vector of user $u$ and item $i$

- $\hat{y}_{u,i}$: interaction probability of user $u$ and item $i$

How to Model

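- Dual-Relation Network:

\[\begin{aligned} \hat{y}_{u,i} &= \sigma\left(\overrightarrow{\mathbf{w}} \cdot [\overrightarrow{\mathbf{z}}_{u,i}^{\text{(AFFECT)}} \oplus \overrightarrow{\mathbf{z}}_{u,i}^{\text{(ASSO)}}]\right) \end{aligned}\]

where $\oplus$ denotes vector concatenation, $\overrightarrow{\mathbf{w}}$ is the output weight vector, and $\sigma$ is the sigmoid function.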
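The final prediction layer can be sketched in plain Python as below. This is a minimal illustration, assuming both predictive vectors have already been computed; the helper name `drnet_predict` and the toy numbers are hypothetical, and a real implementation would use a tensor library and learn $\overrightarrow{\mathbf{w}}$ during training.

```python
import math


def sigmoid(x: float) -> float:
    """Numerically stable logistic function sigma(x) = 1 / (1 + e^{-x})."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)  # avoids overflow of exp(-x) for very negative x
    return e / (1.0 + e)


def drnet_predict(z_affect: list[float], z_asso: list[float], w: list[float]) -> float:
    """Hypothetical helper: y_hat = sigmoid(w . [z_affect (+) z_asso]).

    The (+) operator is concatenation, so w needs
    len(z_affect) + len(z_asso) entries.
    """
    z = z_affect + z_asso  # list concatenation plays the role of the ⊕ operator
    if len(w) != len(z):
        raise ValueError("w must match the length of the concatenated vector")
    logit = sum(w_k * z_k for w_k, z_k in zip(w, z))  # dot product w · z
    return sigmoid(logit)


# Toy values chosen only for illustration (not from the paper)
z_affect = [0.5, 0.0, 1.2]
z_asso = [0.3, 0.8]
w = [0.4, -0.1, 0.2, 0.5, 0.1]
print(drnet_predict(z_affect, z_asso, w))  # a probability in (0, 1)
```

Because the two predictive vectors are concatenated rather than summed, they may have different lengths; only $\overrightarrow{\mathbf{w}}$ has to match their combined length.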

Affection Network

- ID Embedding:

\[\begin{aligned} \overrightarrow{\mathbf{u}}_{u} &= \text{Emb}(u)\\ \overrightarrow{\mathbf{v}}_{i} &= \text{Emb}(i) \end{aligned}\]

- Predictive Vector of user $u$ and item $i$, where $\odot$ denotes the element-wise product:

\[\begin{aligned} \overrightarrow{\mathbf{z}}_{u,i}^{\text{(AFFECT)}} &= \text{MLP}_{\text{ReLU}}(\overrightarrow{\mathbf{u}}_{u} \odot \overrightarrow{\mathbf{v}}_{i}) \end{aligned}\]
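A minimal forward-pass sketch of the Affection Network follows. It assumes plain-Python lists instead of a tensor library, 0-based indices (the notation above counts from 1), small Gaussian random initialization, and hypothetical layer sizes. $\text{MLP}_{\text{ReLU}}$ is read here as a stack of fully connected layers, each followed by ReLU; the paper's exact depth and widths are not given in this post, and the class and function names are illustrative only.

```python
import random

Vector = list[float]
Layer = tuple[list[Vector], Vector]  # (weight matrix W with out_dim rows, bias b)


def relu(x: Vector) -> Vector:
    return [max(0.0, a) for a in x]


def linear(layer: Layer, x: Vector) -> Vector:
    """Computes W x + b for one fully connected layer."""
    W, b = layer
    return [sum(w_rc * x_c for w_rc, x_c in zip(row, x)) + b_r for row, b_r in zip(W, b)]


def mlp_relu(layers: list[Layer], x: Vector) -> Vector:
    """Applies each layer followed by ReLU (the MLP_ReLU block in the equations)."""
    for layer in layers:
        x = relu(linear(layer, x))
    return x


def random_matrix(rng: random.Random, rows: int, cols: int) -> list[Vector]:
    return [[rng.gauss(0.0, 0.1) for _ in range(cols)] for _ in range(rows)]


class AffectionNetwork:
    """Hypothetical sketch of z_{u,i}^(AFFECT) = MLP_ReLU(u_u ⊙ v_i)."""

    def __init__(self, num_users: int, num_items: int, k: int, hidden: list[int], seed: int = 0):
        rng = random.Random(seed)
        self.user_emb = random_matrix(rng, num_users, k)  # rows are u_u (M x K)
        self.item_emb = random_matrix(rng, num_items, k)  # rows are v_i (N x K)
        dims = [k] + hidden
        self.layers = [(random_matrix(rng, dims[n + 1], dims[n]), [0.0] * dims[n + 1])
                       for n in range(len(hidden))]

    def predictive_vector(self, u: int, i: int) -> Vector:
        u_vec = self.user_emb[u]  # Emb(u)
        v_vec = self.item_emb[i]  # Emb(i)
        interaction = [a * b for a, b in zip(u_vec, v_vec)]  # element-wise product ⊙
        return mlp_relu(self.layers, interaction)


net = AffectionNetwork(num_users=3, num_items=5, k=8, hidden=[16, 4])
print(net.predictive_vector(u=0, i=2))  # 4 non-negative numbers (ReLU output)
```

The user and item tables here are the Affection Network's own ($\overrightarrow{\mathbf{u}}_{u}$, $\overrightarrow{\mathbf{v}}_{i}$); per the notation above, the Association Network keeps separate item tables.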

Association Network

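- ID Embedding:

\[\begin{aligned} \overrightarrow{\mathbf{p}}_{i} &= \text{Emb}(i)\\ \overrightarrow{\mathbf{q}}_{j} &= \text{Emb}(j) \end{aligned}\]

- Global Item Vector of User $u$, obtained by attending over the user's history items $\mathcal{R}_{u}^{+} \setminus \{i\}$ (the target item $i$ is excluded) with the query $\overrightarrow{\mathbf{h}}$, keys produced by the Affection Network, and values taken from the rows $\overrightarrow{\mathbf{q}}_{j}$ of the history item embedding matrix $\mathbf{Q}$:

\[\begin{aligned} \overrightarrow{\mathbf{x}}_{u} &= \text{ATTN}(\overrightarrow{\mathbf{h}},\text{Affection}(u,\forall j \in \mathcal{R}_{u}^{+} \setminus \{i\}), \mathbf{Q}[\forall j \in \mathcal{R}_{u}^{+} \setminus \{i\},:]) \end{aligned}\]

- Predictive Vector of user $u$ and item $i$:

\[\begin{aligned} \overrightarrow{\mathbf{z}}_{u,i}^{\text{(ASSO)}} &= \text{MLP}_{\text{ReLU}}(\overrightarrow{\mathbf{x}}_{u} \odot \overrightarrow{\mathbf{p}}_{i}) \end{aligned}\]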
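The Association Network's two item roles can be sketched the same way. This minimal sketch assumes plain-Python lists and 0-based item ids, and keeps two separate tables, `P` for target items and `Q` for history items, as in the notation above. To stay short and runnable, the attention that produces $\overrightarrow{\mathbf{x}}_{u}$ is replaced by a plain uniform average of the history vectors; this is a placeholder only, not DRNet's attention (which the "How to Attention" section of this post describes). All function names and sizes are hypothetical.

```python
import random

Vector = list[float]
Layer = tuple[list[Vector], Vector]  # (weight matrix W with out_dim rows, bias b)


def mlp_relu(layers: list[Layer], x: Vector) -> Vector:
    """Each fully connected layer W x + b is followed by ReLU."""
    for W, b in layers:
        x = [max(0.0, sum(w * a for w, a in zip(row, x)) + b_r) for row, b_r in zip(W, b)]
    return x


def history_excluding_target(positive_items: list[int], target: int) -> list[int]:
    """R_u^+ \\ {i}: the user's positive items with the target item left out."""
    return [j for j in positive_items if j != target]


def uniform_average(vectors: list[Vector]) -> Vector:
    """PLACEHOLDER for the attention module: weight 1/|history| per item."""
    if not vectors:
        raise ValueError("history is empty")
    n = len(vectors)
    return [sum(col) / n for col in zip(*vectors)]


def association_predictive_vector(x_u: Vector, p_i: Vector, layers: list[Layer]) -> Vector:
    """z_{u,i}^(ASSO) = MLP_ReLU(x_u ⊙ p_i)."""
    return mlp_relu(layers, [a * b for a, b in zip(x_u, p_i)])


rng = random.Random(0)
num_items, k = 6, 4
P = [[rng.gauss(0.0, 0.1) for _ in range(k)] for _ in range(num_items)]  # target-item table
Q = [[rng.gauss(0.0, 0.1) for _ in range(k)] for _ in range(num_items)]  # history-item table
layers = [([[rng.gauss(0.0, 0.1) for _ in range(k)] for _ in range(3)], [0.0] * 3)]

user_items = [0, 2, 3, 5]  # hypothetical R_u^+
target = 3
history = history_excluding_target(user_items, target)  # [0, 2, 5]
x_u = uniform_average([Q[j] for j in history])
print(association_predictive_vector(x_u, P[target], layers))
```

Leaving $i$ out of the history (the $\setminus \{i\}$ in the formulas) prevents the target item from being matched against its own history embedding.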

How the Attention Works

- Query Vector is the Global Context Vector:

\[\begin{aligned} \overrightarrow{\mathbf{h}} \end{aligned}\]

- Key Vector is Generated by the Affection Network, for each history item $j \in \mathcal{R}_{u}^{+} \setminus \{i\}$:

\[\begin{aligned} \overrightarrow{\mathbf{z}}_{u,j}^{\text{(AFFECT)}} &= \text{MLP}_{\text{ReLU}}(\overrightarrow{\mathbf{u}}_{u} \odot \overrightarrow{\mathbf{v}}_{j}) \end{aligned}\]

- Global Item Vector of User $u$ is Generated by:

\[\begin{aligned} \overrightarrow{\mathbf{x}}_{u} &= \sum_{j \in \mathcal{R}_{u}^{+} \setminus \{i\}}{\alpha_{u,j} \cdot \overrightarrow{\mathbf{q}}_{j}} \end{aligned}\]

- Attention Weight is Calculated by Smoothed Softmax:

\[\begin{aligned} \alpha_{u,j} &= \frac{\exp{f(\overrightarrow{\mathbf{h}},\overrightarrow{\mathbf{z}}_{u,j}^{\text{(AFFECT)}})}}{\left[\sum_{j' \in \mathcal{R}_{u}^{+} \setminus \{i\}}{\exp{f(\overrightarrow{\mathbf{h}},\overrightarrow{\mathbf{z}}_{u,j'}^{\text{(AFFECT)}})}}\right]^{\beta}} \end{aligned}\]

- $0 < \beta \le 1$: Smoothing Factor ($\beta = 1$ recovers the standard softmax)

- Attention Score Function is Dot Product:

\[\begin{aligned} f(q,k) &= q \cdot k \end{aligned}\]
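The attention step (dot-product scores between the query $\overrightarrow{\mathbf{h}}$ and each key, the smoothed softmax, and the weighted sum of history vectors) can be sketched in plain Python as below. This is a minimal sketch under stated assumptions: keys and values are passed in already computed, the function names are hypothetical, and the smoothed softmax is evaluated in log space as $\alpha_{u,j} = \exp\left(s_j - \beta \log \sum_{j'} \exp s_{j'}\right)$, which is algebraically the same formula but avoids overflow for large scores.

```python
import math

Vector = list[float]


def dot(a: Vector, b: Vector) -> float:
    """Attention score f(q, k) = q · k."""
    if len(a) != len(b):
        raise ValueError("query and key must have the same length")
    return sum(x * y for x, y in zip(a, b))


def smoothed_softmax(scores: list[float], beta: float) -> list[float]:
    """alpha_j = exp(s_j) / (sum_j' exp(s_j'))^beta, computed in log space."""
    if not 0.0 < beta <= 1.0:
        raise ValueError("beta must satisfy 0 < beta <= 1")
    m = max(scores)
    log_sum_exp = m + math.log(sum(math.exp(s - m) for s in scores))
    return [math.exp(s - beta * log_sum_exp) for s in scores]


def attend(h: Vector, keys: list[Vector], values: list[Vector], beta: float) -> tuple[Vector, list[float]]:
    """Returns (x_u, alphas) for one user and one target item.

    h      : global context (query) vector
    keys   : z_{u,j}^(AFFECT) for every history item j in R_u^+ \\ {i}
    values : q_j for the same history items, in the same order
    """
    if not keys or len(keys) != len(values):
        raise ValueError("need one key and one value per history item")
    scores = [dot(h, k) for k in keys]
    alphas = smoothed_softmax(scores, beta)
    dim = len(values[0])
    x_u = [sum(a * v[d] for a, v in zip(alphas, values)) for d in range(dim)]
    return x_u, alphas


# Hypothetical toy inputs: two history items with 2-d keys and values
h = [1.0, 0.5]
keys = [[0.2, 0.4], [1.0, 0.0]]
values = [[1.0, 0.0], [0.0, 1.0]]
x_u, alphas = attend(h, keys, values, beta=1.0)
print(alphas, x_u)  # with beta = 1 the weights sum to 1
_, smoothed = attend(h, keys, values, beta=0.5)
print(sum(smoothed))  # equals (sum_j exp(s_j)) ** (1 - beta), not 1
```

With $\beta < 1$ the denominator is damped, so the weights no longer sum to 1; their total is $\left(\sum_{j'} \exp s_{j'}\right)^{1-\beta}$.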

This post is licensed under CC BY 4.0 by the author.